Republished with permission from Governing Magazine, by Carl Smith

In Brief:

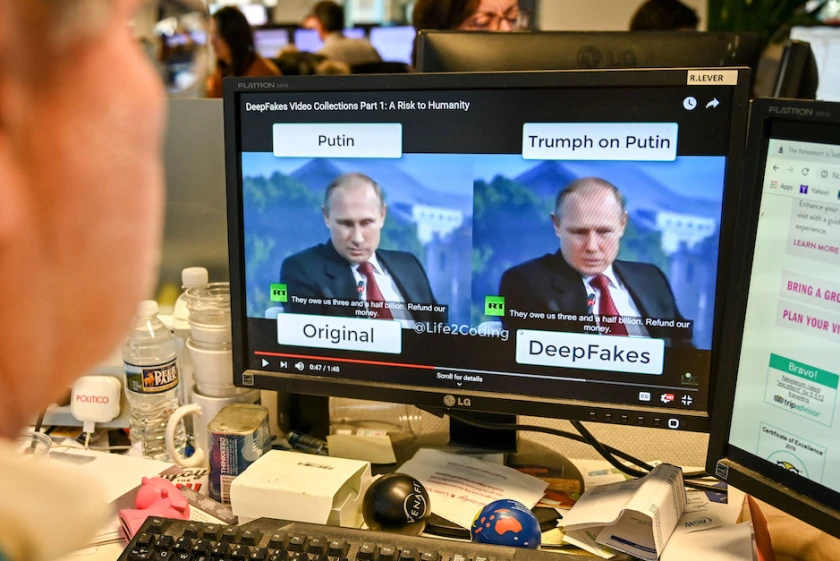

- Deepfake audio and video created with AI tools could change the course of elections and further fuel election distrust.

- There are few rules in place to discourage or prevent the creation and distribution of such content.

- Some states have taken first steps toward regulation, but meaningful remedies may lie in the hands of the tech sector.

Artificial intelligence (AI) is hardly the first breakthrough technology released into society before its impact was understood. We still have a lot to learn about human-made chemicals that have made their way into air, soil, water, food and our bodies since the 1950s. But a contentious election season is just ahead, and policymakers have to do their best to contain a force that could make things even more volatile.

A Thousand-Year Flood of Falsehoods

One big concern is that AI will generate a thousand-year flood of falsehoods and automatically pump them into systems already overwhelmed by misinformation. However, there are generally no legal penalties for simply saying things that aren’t true.

“Drawing a line in political campaigns around when you can lie and what is a lie has been so difficult that we’ve essentially said that, legally, you can lie in political campaigns,” says Gideon Cohn-Postar, legislative manager for Issue One. (Fraudulent misrepresentation, making damaging statements about a candidate or party while pretending to speak on their behalf, is an exception.)

Cohn-Postar, a historian, notes that the Reconstruction era (his specialty) was replete with lying about politics. “Trying to see the AI threat as an issue of speech that must be policed is fraught with constitutional problems.”

Crossing the Line Into Election Interference

Falsehood is one thing, but election interference is another. Last December, conservative operatives who mounted a robocall campaign designed to disenfranchise Black voters were sentenced to prison terms. Anyone who uses AI to generate robocalls for similar purposes—and steals the voices of trusted figures to make them more believable—won’t be able to stand on the First Amendment.

Deepfake videos have been in the sights of legislators for some time. In 2019, Texas became the first state to establish criminal (misdemeanor) penalties for deepfake videos created to harm candidates or influence election outcomes. A federal law enacted in the same year focused largely on assessing how foreign actors might use them.

Do communications automatically generated by human-made technology have the same “rights” as those created by humans themselves? Humans are already working overtime to clog the inboxes of election officials with frivolous yet time-consuming records requests. Should they face consequences if they use AI to raise the volume and granularity of those requests by orders of magnitude?

There’s relatively little jurisprudence on issues related to AI and elections, says Mekela Panditharatne, counsel for the Brennan Center's Democracy Program. “So there is room to some extent to move the ball and to shape this area.”

AI-generated video will become harder and harder to distinguish from real video. It's the main target of legislative efforts to prevent AI from having a disruptive effect on elections. (Alexandra Robinson/AFP/TNS)

What Have States Done?

A few states have enacted legislation to curb the influence of AI-generated messaging on their elections. What these laws leave unaddressed says as much about the “shaping” opportunity evoked by Panditharatne as what they cover.

“Some of the areas we’re particularly concerned about are voter suppression efforts that could be supercharged by material deception about election offices as well as candidates, and the use of AI in election administration,” she says.

As noted earlier, Texas election code now addresses deepfake videos. To be guilty of a misdemeanor, a person must both create such a video and cause it to be published or distributed within 30 days of an election.

California election code extends prohibitions to both audio and video content made with AI to damage the reputation of candidates or influence voters. It lengthens the period during which these may not be distributed to 60 days before an election.

However, restrictions don’t apply if the content includes a disclosure that it has been manipulated. (The code gives precise guidelines for disclosures.) Those harmed can seek damages, but there are no criminal penalties for those who create and distribute deceptive content.

AI-manipulated content covered by Minnesota’s election code includes “any video recording, motion-picture film, sound recording, electronic image, or photograph, or any technological representation of speech or conduct.” There are criminal penalties for those who use these to influence outcomes. The code also provides for injunctive relief against persons violating its AI rules, or who are about to do so.

A Washington state bill, signed in May, also requires disclosure on election-related audio and visual material created using AI. As in California, it does not classify deceptive use of such material as a crime, but those who are harmed can seek damages. This month, a bipartisan bill was introduced in Michigan that proposes to control the influence of AI-generated content on elections by requiring disclosures.

It's a step forward for this handful of states to take on false and misleading sights and sounds, but there’s no way to know how enforceable their regulations will be. (They won’t slow foreign actors, unfortunately.)

Moreover, people who want to influence elections are experimenting with applications that no one has thought about, says Rick Claypool, research director for the president’s office at Public Citizen. For example, a person could make an AI avatar of themselves and use it to canvass for a candidate.

“Here's the deepfake of you parroting what the campaign wants you to say,” he says. “That’s where you get into a creepy territory.”

Congress Gets Involved

In September, Sen. Amy Klobuchar introduced the Protect Elections from Deceptive AI Act, a bipartisan bill intended to contain the ability of AI to influence federal elections. “We need rules of the road in place to stop the use of fraudulent AI-generated content in campaign ads,” Klobuchar said when the bill was put forward.

The bill prohibits the use of AI-generated audio and visual media that is “materially deceptive” to influence elections or raise funds. Broadcasters and publishers are allowed to use these media in their programming if they come with specified disclosures regarding their authenticity. Those whose voice or likeness is used in a deepfake, or who have been the subject of one, can seek damages and recovery of attorney’s fees.

A proposed AI Labeling Act before the Senate would require AI content systems themselves to generate “clear and conspicuous” notices that the content they produce is created by AI, with metadata noting the tool used to create the content and the date and time it was created. The AI Disclosure Act, a House bill, calls for a disclaimer on any output generated by AI. The House Candidate Voice Fraud Prohibition Act seeks to prevent the distribution of AI-created audio that impersonates a candidate’s voice with intent to damage that candidate’s reputation or influence voters.

With their narrow focus, such bills might have a better chance of passing than those attempting to take on the vast ramifications of AI for jobs, entertainment and the economy. They’d fill some gaps for states that have yet to enact their own laws. At this juncture, however, it’s hard to assume anything will make it through Congress quickly.

Even in the unlikely event that Congress moves on these initiatives, are they enough?

AI was on the agenda in a September meeting of science and technology advisors to the president in San Francisco. Legislation focused strictly on containing the impact of AI on elections could have a better chance of making it into law in the near future than broad efforts to regulate technology with capabilities that are still not fully understood. (TNS)

Could Big Tech Finish What It Started?

Tech leaders have asked Congress to regulate AI, a seeming recognition that even they don’t really know what they have unleashed. When it comes to preventing election interference, the best way forward may be for the tech sector to take the lead.

Policing the outputs of AI systems is a thorny endeavor that we might not want government to undertake, says Renée DiResta, technical research manager at the Stanford Internet Observatory.

There’s no gating function around AI technology itself; anyone can use it. The tech platforms that distribute content could provide a gating function for generative AI, detecting whether the accounts and networks posting content are authentic.

“Those are the rules under which Russian or Chinese bot accounts would come down,” DiResta says.

The tech sector has a general interest in making the source and history of digital content transparent. The Coalition for Content Provenance and Authenticity has developed open-source technical standards for this purpose that can “out” deepfake creations.

Google, OpenAI and other tech companies, along with research partners such as DiResta, are taking election issues seriously. Still, it’s difficult to say who will be able to do the work of countering the misinformation that AI will make more plentiful and believable.

“This is a very transitional time with a lot of these questions, and we really don’t have the answers yet—even as there’s a critically important election looming,” DiResta says.

Governing

Governing: The Future of States and Localities takes on the question of what state and local government looks like in a world of rapidly advancing technology. Governing is a resource for elected and appointed officials and other public leaders who are looking for smart insights and a forum to better understand and manage through this era of change.