Republished with permission from Kansas Reflector, by

Facebook’s unrefined artificial intelligence misclassified a Kansas Reflector article about climate change as a security risk, and in a cascade of failures blocked the domains of news sites that published the article, according to technology experts interviewed for this story and Facebook’s public statements.

The assessment is consistent with an internal review by States Newsroom, the parent organization of Kansas Reflector, which faults Facebook for the shortcomings of its AI and the lack of accountability for its mistake.

It isn’t clear why Facebook’s AI determined the structure or content of the article to be a threat, and experts said Facebook may not actually know what attributes caused the misfire.

“It appears Facebook used overzealous and unreliable AI to mistakenly identify a Reflector article as a phishing attempt,” said States Newsroom president and publisher Chris Fitzsimon. “Facebook’s response to the incident has been confusing and hard to decipher. Just as troubling was the false information provided to our readers that our content somehow posed a security risk, damage to our reputation and reliability they still have not corrected with our followers on their platform.”

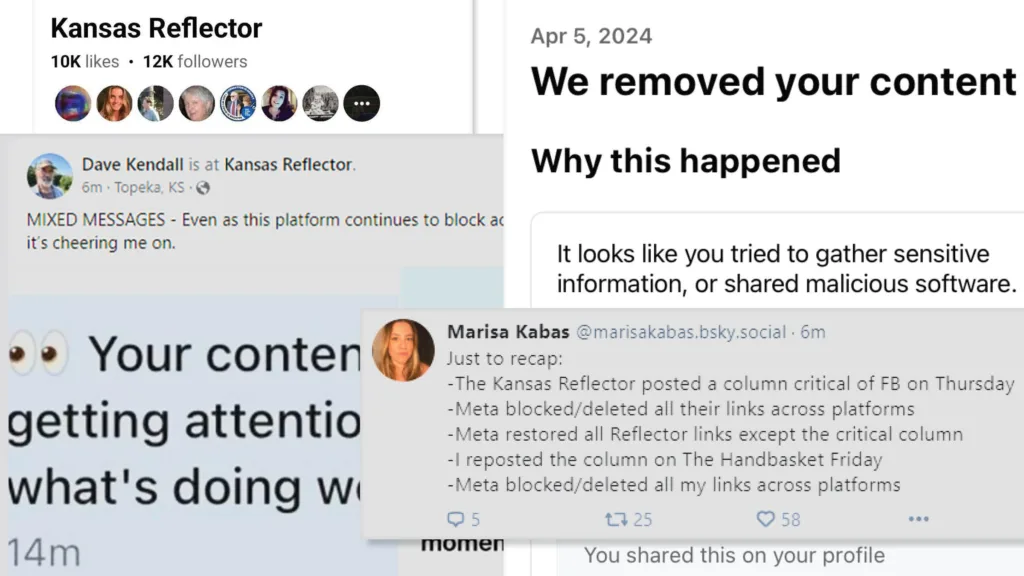

On April 4, Facebook refused to let Kansas Reflector share an opinion column written by Dave Kendall about his climate change documentary, then removed every post every user had ever made that pointed to any story on Kansas Reflector’s website. Facebook restored those posts about seven hours later, but continued to block the column.

The following day, Kansas Reflector attempted to share the column as it appeared on News From the States, which is run by States Newsroom, and The Handbasket, a newsletter run by independent journalist Marisa Kabas. Facebook rejected those posts, then removed all links pointing to both sites, just as it had done to Kansas Reflector the day before.

In removing posts, Facebook sent notifications to users that falsely identified the news sites as cybersecurity risks.

Meta—the company behind Facebook, Instagram and Threads—issued a public apology for the “security error.” But a Meta spokesman said Facebook wouldn’t follow up with users to correct the misinformation it had given them.

Kansas Reflector continues to hear from readers who are confused about the situation. Facebook’s actions also disrupted Kansas Reflector’s newsgathering operations during the final days of the legislative session and had a chilling effect on other news media.

Daniel Kahn Gillmor, a senior staff technologist at the American Civil Liberties Union, said Facebook’s actions demonstrate the danger for society of depending heavily on a single communications platform to determine what is worthy of discussion.

“That’s just not their core competency,” Gillmor said. “At some level, you can see Facebook as somebody who’s gotten out ahead of their skis. Facebook originally was a hookup app for college students back in the day, and all of a sudden we’re now asking it to help us sort fact from fiction.”

Social media posts reflect Facebook’s actions to block news sites that published Dave Kendall’s column. (Illustration by Sherman Smith/Kansas Reflector)

‘Welcome to AI’

Adam Mosseri, the head of Meta’s Instagram, attributed the error to machine learning classifiers, a specific type of AI that is trained to recognize characteristics associated with phishing scams, which try to fool people into divulging personal information.

The classifiers evaluate millions of pieces of content every day, Mosseri said in a Threads post, and sometimes they get it wrong. Mosseri didn’t respond to a Threads post seeking more detail.

Jason Rogers, CEO of Invary, a cybersecurity company that uses NSA-licensed technology and has ties to the University of Kansas Innovation Park, reviewed the Kendall column as it appeared on Kansas Reflector, News From the States and The Handbasket.

Facebook’s sensors could be sensitive to things like the high volume of hyperlinks in the column or the resolution of the photos that appeared on the page, Rogers said. Still, he said, it was “strange it would be flagged as a ‘cyber’ threat by an AI.”

“Welcome to AI, and why it isn’t as ‘ready’ as some people make it out to be,” Rogers said.

He said it was possible that Kansas Reflector’s attempts to circumvent Facebook’s filter—telling people to read Kendall’s column by going to KansasReflector.com, and then trying to share the same column from other sites—may have signaled to the AI that this was the behavior of a phishing scam, causing it to block the domains for all three sites.

Sagar Samtani, director of the Kelley School of Business’ Data Science and Artificial Intelligence Lab at Indiana University, said it is common for this kind of technology to produce false positives and false negatives.

He said Facebook is going through a “learning process,” trying to evaluate how people across the globe might view different types of content and shield the platform from bad actors.

“Facebook is just trying to learn what would be appropriate, suitable content,” Samtani said. “So in that process, there is always going to be a ‘whoops,’ like, ‘We shouldn’t have done that.’ ”

And, he said, Facebook may not be able to say why its technology misclassified Kansas Reflector as a threat.

“Sometimes it’s actually very difficult for them to say something like that because sometimes the models aren’t going to necessarily output exactly what the features are that may have tripped the alarm,” Samtani said. “That may be something that’s not within their technical capability to do so.”

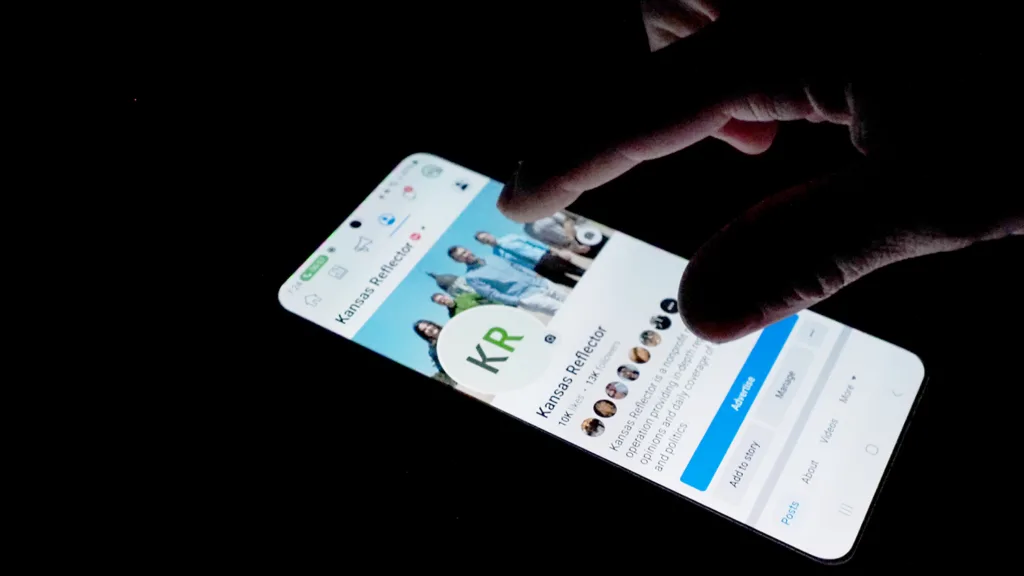

On April 4, Kansas Reflector saw all its Facebook posts deleted. The platform also blocked users from sharing links to the site. The disruption grew to include two other sites on Friday. (Sherman Smith/Kansas Reflector)

‘Where’s the Accountability’

Kendall’s column was critical of Facebook because the platform had declined to let him purchase an advertisement to promote his climate change movie. Facebook had told him the topic was too controversial.

In a pair of April 5 phone calls, Meta spokesman Andy Stone insisted Facebook’s actions against the three news sites that published the Kendall column had nothing to do with the content of the column.

Gillmor, the ACLU technologist, questioned that explanation.

“They’re acting as a filter for their readers and trying to keep readers from what they consider to be malign influences, whatever that means,” Gillmor said. “I would be deeply, deeply shocked if there was nothing that a normal human would consider ‘content’ that could trigger those detectors.”

It would actually be difficult, he said, to program the AI to ignore the semantics of an article.

“They know what kinds of reactions people have to media that they read,” Gillmor said. “They know the dwell time people have on an article. They know a lot of information. I don’t know how or why they would keep that out of their classifier.”

He also said AI systems may not be able to provide an explanation that “any normal human would understand” about why they rejected Kendall’s column and blocked the domains of news sites that published it.

Stone, the Meta spokesman, declined to answer questions for this story, including: How does Facebook think it should be held accountable for its mistake? Does Facebook actually know what caused the mistake? What changes were made to avoid the mistake happening again? Is Facebook’s Oversight Board reviewing the situation?

Gillmor’s work with the ACLU is focused on ways technology can impact civil liberties like freedom of speech, freedom of association, and privacy.

“This is a great example of one of the big problems of having so much dependence on one ecosystem to distribute information,” Gillmor said. “And the explanation that you’re getting from them is, ‘Well, we screwed up.’ OK, you screwed up, but the consequences are for everyone.”

“Where’s the accountability here?” he added. “Is Facebook going to hold the AI system accountable?”

Kansas Reflector

Kansas Reflector is a nonprofit news operation providing in-depth reporting, diverse opinions and daily coverage of state government and politics. This public service is free to readers and other news outlets.